Categorizing and Communicating Usability Problems

Little attention has been given to how usability results (the categorization and measurement of the problems discovered through a range of usability evaluations) are communicated. In common practice, most usability practitioners organize the problems they identify by (1) the category or attribute of the problem and (2) its severity. Unfortunately, practitioners agree little on which list of categories is the most comprehensive and which severity scale is the most appropriate. The most common answer, of course, remains “it depends.”

Regardless, the way we communicate usability results is paramount to helping development teams make decisions that will improve a product’s design. This post compares three readily available categorization methods to explore the challenges I’ve encountered when deciding how to organize and communicate usability results.

Overview

After a usability evaluation, such as a usability test or heuristic review, researchers categorize the usability problems they found. Categorization helps researchers and development teams better understand the results and prioritize actionable solutions, so that resources are allocated efficiently and effectively. Three popular methods have been proposed by (1) Nielsen, (2) Dumas and Redish, and (3) Rubin and Chisnell. Each pairs a severity scale with a method for categorizing, or “bucketing,” usability problems.

Severity Scales

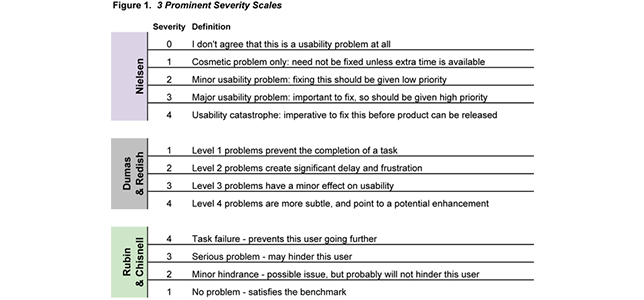

Many different severity scales exist, and few are alike. Corporations and development firms often create their own scales or adapt existing ones to fit their product or business needs. Developing a severity scale also gives everyone involved an opportunity to buy into the process of identifying and prioritizing usability problems. The three scales discussed here are shown in Figure 1.

In general, existing scales are inconsistent with one another. Some use three levels, others four, and some five. Some treat a lower number as more severe, while others treat a higher number as more severe.

Does it matter whether the scales differ? Does human factors, as a profession grounded in behavioral science, require consistent severity scales to improve reliability across usability tests? These questions are important and often debated among human factors professionals, but in practice, if development teams understand the ratings and agree with how problems are rated, the resulting recommendations will most likely lead to product improvements.

We faced this question recently while conducting usability tests for a client. We chose a scale comparable to the one recommended by Dumas and Redish (Figure 1) because it was less stringent than the other examples and allowed us to define severity for a broad range of usability problems. We also dropped the fourth level because our client wanted to focus on problems we would recommend spending resources on now, not later.

Categorization

In addition to a severity scale, each of the three methods provides a list of attributes that help describe the problems identified during an evaluation. Nielsen (2006), for example, lists (1) the frequency with which the problem occurs, (2) the impact of the problem, or how easily users can overcome it, (3) the persistence of the problem, and (4) its market impact, or effect on sales and popularity in the marketplace. I recommend using categories that are logical and easy to understand, but also relevant to the product and to its users’ particular needs.

Unfortunately, the available literature gives no guidance on how to translate problem attributes into severity ratings. Which factor, for example, should receive more priority: frequency, impact, persistence, or market impact? Many of the proposed categorization systems share this problem. Because the relationship between categorization attributes and severity ratings is ambiguous, different evaluators may arrive at conflicting assessments of the same problem.

Frequency. Frequency is an important attribute to consider, yet several of the proposed systems do not mention it. A problem’s frequency is often difficult to determine in usability tests with only three to five users. More often than not, usability experts must estimate frequency, both as the number of users who will experience the problem and as the number of times a single user will encounter it during one use of the product. For this reason, we often leave frequency out of our findings unless we believe the problem will be a significant one.

Scope. According to Dumas and Redish, the scope of a problem is defined by its impact on other parts of a system. If a problem is local, it has a small or limited impact on the usability of the rest of the system. If a problem is global, however, it will negatively impact the user’s experience with the rest of the system. Global problems will have a larger impact on the product’s usability, but may also be more challenging, and in some cases impractical, to solve.

Business goals. One concern with the three categorization methods discussed is that they do not adequately incorporate business goals into the fabric of their rating systems. Nielsen comes close by including market impact, but even this inclusion seems secondary to the other factors mentioned. Furthermore, there is no indication of how much weight the market impact holds relative to frequency, impact, and persistence. We often consider business goals in order to show how usability results can contribute to a company’s bottom line.

It’s important for evaluators to understand the relative value of each attribute so that they can take this into consideration when assigning severity to the problems they uncover. If possible, include the development team in the process of defining severity so that everyone has a better understanding of why certain problems take precedence.

Communicating Problems

Excellent guidelines are available to help evaluators communicate research findings that are consistently useful and usable. Molich, Jeffries, and Dumas (2007, p. 178) provide a practical list for communicating problems in ways that will improve a product’s usability. In addition, there is growing discussion among usability practitioners that usability problems should be reported in more positive and tactful language. Some go further, advocating an approach that focuses on articulating good design principles rather than simply pointing out design mistakes.

It’s easy to see why there is often push-back on the results of usability tests (nobody likes to be told why their solution doesn’t work), but it’s important to remember that everyone on the development team shares the same goal: to continually improve the products we design. Communicating usability results effectively is one step toward designing better products.

References

Dumas, J. S., & Redish, J. C. (1999). A Practical Guide to Usability Testing (Rev. ed.). Portland, OR: Intellect Books.

Molich, R., & Dumas, J. S. (2008). Comparative usability evaluation (CUE-4). Behaviour & Information Technology, 27(3), 263-281.

Molich, R., Jeffries, R., & Dumas, J. S. (2007). Making usability recommendations useful and usable. Journal of Usability Studies, 2(4), 162-179.

Nielsen, J. (2006, December 19). Severity ratings for usability problems [web log message]. Retrieved from http://www.useit.com/papers/heuristic/severityrating.html

Rubin, J., & Chisnell, D. (2008). Handbook of Usability Testing: How to Plan, Design, and Conduct Effective Tests (2nd ed.). Indianapolis, IN: Wiley Publishing.

Wixon, D. (2003). Evaluating usability methods: Why the current literature fails the practitioner. Interactions, 10(4), 28-34.